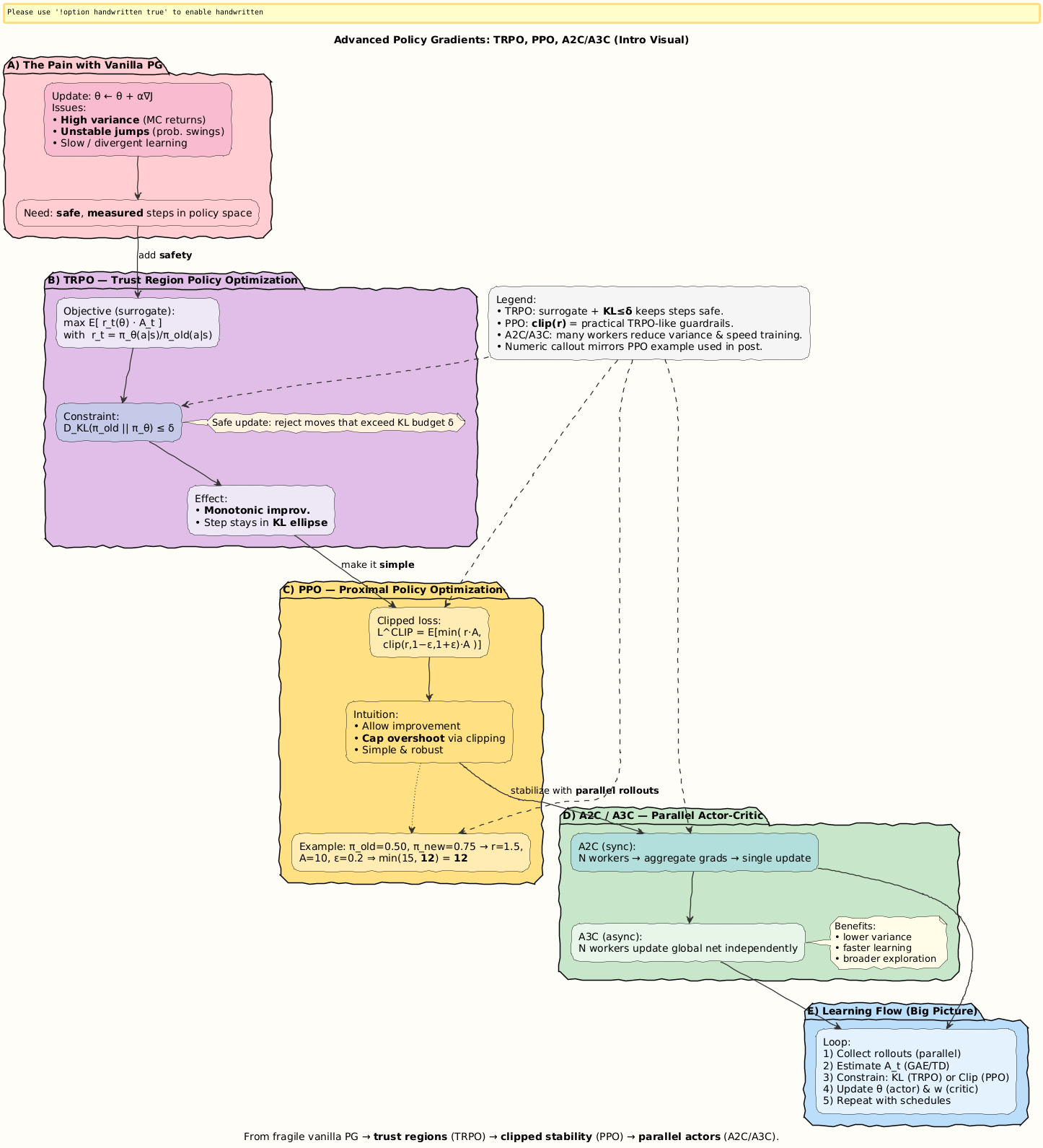

Advanced Policy Gradient Methods: From Fragile to Practical Reinforcement Learning

The Story: From Stumbling to Mastery

When researchers first used policy gradients, they were fascinated: “Wow, we can directly optimize behavior!” But frustration quickly set in.

Sometimes the agent’s policy would swing wildly, going from cautious to reckless in one update.

Sometimes learning was so slow and jittery it never stabilized.

Other times, updates that should have been good actually made things worse.

It was like trying to teach a toddler to walk uphill:

Too big a step → they stumble and fall.

Too small a step → they never leave the same spot.

What the field needed were methods that could let policy gradients take measured, reliable steps uphill.

That’s where TRPO, PPO, and A2C/A3C enter the story.

Visual:

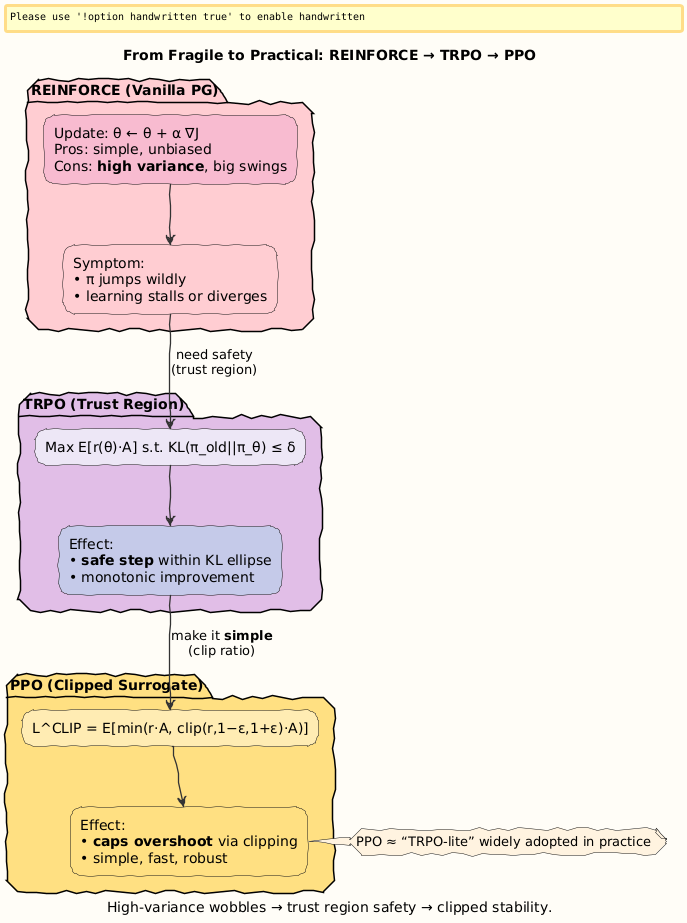

Step 1. The Problem with Vanilla Policy Gradients

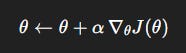

The vanilla update rule is:

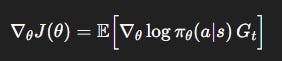

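where θ are the policy parameters, α is the learning rate, π_θ(a_t|s_t) is the probability the policy assigns to the action taken, and G_t is the Monte Carlo return from time step t.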
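To make the update concrete, here is a minimal sketch that assumes a single state, two actions, and a tabular softmax policy whose parameters θ are simply the action logits. The helper names `softmax` and `reinforce_update` are hypothetical. For a softmax policy, the gradient of log π(a) with respect to the logits is the one-hot vector of a minus the action probabilities, so the size of the step scales directly with G_t:

```python
import math


def softmax(logits):
    """Turn a list of logits into action probabilities."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_update(theta, action, G, alpha):
    """One vanilla policy-gradient step: theta <- theta + alpha * G * grad log pi(action).

    Assumes a tabular softmax policy (theta = logits), where
    d log pi(action) / d theta_i = 1[i == action] - pi(i).
    """
    probs = softmax(theta)
    grad_log_pi = [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]
    return [t + alpha * G * g for t, g in zip(theta, grad_log_pi)]


# Same starting policy and learning rate, two different sampled returns.
small = reinforce_update([0.0, 0.0], action=0, G=5.0, alpha=0.1)
large = reinforce_update([0.0, 0.0], action=0, G=50.0, alpha=0.1)
print(softmax(small)[0])  # about 0.62: a modest shift toward action 0
print(softmax(large)[0])  # about 0.99: one update makes the policy nearly deterministic
```

With the same learning rate, a return ten times larger moves the logits ten times as far, which is the “unstable jumps” problem in miniature.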

Two issues emerge:

Unstable jumps: A big gradient can radically change the policy distribution in one update.

High variance: Monte Carlo returns G_t fluctuate heavily, producing noisy gradients.

Result: learning curves full of spikes, divergence, or stagnation.
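The return G_t itself is computed by sweeping an episode backwards. The sketch below assumes a discount factor γ (not specified in the text above) and uses hypothetical episodes with random 0/1 rewards to show how widely G_0 spreads from one episode to the next, even though the reward process never changes:

```python
import random
import statistics


def discounted_returns(rewards, gamma):
    """Compute G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for every step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):  # sweep backwards so each G_t reuses G_{t+1}
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


print(discounted_returns([1.0, 0.0, 2.0], gamma=0.9))  # approximately [2.62, 1.8, 2.0]

# Hypothetical episodes: 20 steps, each reward is 0 or 1 at random (gamma is also assumed).
rng = random.Random(0)
g0_samples = [
    discounted_returns([rng.choice([0.0, 1.0]) for _ in range(20)], gamma=0.99)[0]
    for _ in range(1000)
]
print(f"G_0 mean {statistics.mean(g0_samples):.2f}, std {statistics.pstdev(g0_samples):.2f}")
```

Every one of those G_0 values would be multiplied into a gradient step, which is where the noisy learning curves come from.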

Step 2. TRPO: Trust Region Policy Optimization

Imagine holding a child’s hand as they climb. You don’t let them wander too far from their previous step.

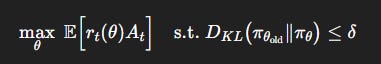

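TRPO formalizes this with a constrained optimization:

$$\max_{\theta}\ \mathbb{E}_t\left[\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\text{old}}(a_t \mid s_t)}\,A_t\right] \quad \text{subject to} \quad \mathbb{E}_t\left[D_{\mathrm{KL}}\big(\pi_{\text{old}}(\cdot \mid s_t)\,\|\,\pi_\theta(\cdot \mid s_t)\big)\right] \le \delta$$

Where:

π_θ(a_t|s_t) / π_old(a_t|s_t) is the probability ratio between the new and old policies.

A_t is the advantage of taking action a_t in state s_t.

δ is the trust-region size: the largest average KL divergence allowed between the old and new policies.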

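In theory, this yields monotonic policy improvement: each update should not undo past progress. The guarantee holds for an idealized version of the algorithm; practical TRPO approximates it.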


Numerical Example 1: TRPO Ratio with KL Constraint

Suppose:

Old policy probability for action a: π_old(a|s) = 0.4.

New policy probability: π_new(a|s) = 0.5.

Advantage: A = 2.

Trust region: δ = 0.05.

Step 1: Compute ratio

r = π_new(a|s) / π_old(a|s) = 0.5 / 0.4 = 1.25.

Step 2: Surrogate objective term

r · A = 1.25 · 2 = 2.5.

Step 3: KL check

Approximate KL between π_old and π_new = 0.02 (< δ).

Update accepted: within trust region, improvement valid.

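Had the KL exceeded δ = 0.05, the update would have been rejected; in practice, TRPO's backtracking line search then shrinks the step until the constraint holds.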
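The numbers in this example can be checked in a few lines. One assumption is needed for the KL term: the text gives only the probability of action a, so this sketch treats the policy as having exactly two actions, which makes the other action's probability 0.6 under π_old and 0.5 under π_new. Under that assumption the KL divergence comes out at about 0.0201, consistent with the 0.02 quoted above:

```python
import math


def kl_categorical(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Assumption: a two-action policy, so the second entries (0.6 and 0.5) complete each distribution.
pi_old = [0.4, 0.6]
pi_new = [0.5, 0.5]
advantage = 2.0
delta = 0.05

ratio = pi_new[0] / pi_old[0]        # 1.25
surrogate = ratio * advantage        # 2.5
kl = kl_categorical(pi_old, pi_new)  # about 0.020, measured as KL(pi_old || pi_new)
accepted = kl <= delta

print(f"ratio={ratio:.2f} surrogate={surrogate:.2f} KL={kl:.4f} accepted={accepted}")
# prints: ratio=1.25 surrogate=2.50 KL=0.0201 accepted=True
```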

Interpretation: TRPO prevents over-aggressive leaps that destabilize learning by bounding how far the new policy can drift from the old one.
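In the full algorithm, the candidate step comes from a conjugate-gradient solve, and a backtracking line search shrinks it until the KL constraint holds and the surrogate actually improves. The sketch below covers only the line-search part, under simplifying assumptions: one state, two actions, a softmax policy over logits, a hypothetical full step handed in directly, and the surrogate computed exactly as an expectation over actions. All function names are illustrative:

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_categorical(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def surrogate(theta, pi_old, advantages):
    """E_{a ~ pi_old}[(pi_theta(a) / pi_old(a)) * A(a)], computed exactly over the actions."""
    pi_new = softmax(theta)
    return sum(po * (pn / po) * adv for po, pn, adv in zip(pi_old, pi_new, advantages))


def backtracking_line_search(theta_old, full_step, advantages, delta, shrink=0.5, max_tries=10):
    """Shrink the proposed step until KL(pi_old || pi_new) <= delta and the surrogate improves."""
    pi_old = softmax(theta_old)
    old_surrogate = surrogate(theta_old, pi_old, advantages)
    fraction = 1.0
    for _ in range(max_tries):
        candidate = [t + fraction * s for t, s in zip(theta_old, full_step)]
        kl = kl_categorical(pi_old, softmax(candidate))
        if kl <= delta and surrogate(candidate, pi_old, advantages) > old_surrogate:
            return candidate, fraction, kl
        fraction *= shrink
    return list(theta_old), 0.0, 0.0  # no acceptable step: keep the old policy


# Hypothetical setup: equal starting logits and an overly aggressive proposed step.
theta_new, fraction, kl = backtracking_line_search(
    theta_old=[0.0, 0.0], full_step=[2.0, -2.0], advantages=[2.0, -1.0], delta=0.05
)
print(f"accepted fraction={fraction}, KL={kl:.3f}")  # accepted fraction=0.125, KL=0.031
```

Here the full step would take the policy far outside the trust region (a KL of roughly 1.3), so the search halves it three times before accepting.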


Step 3. PPO: Proximal Policy Optimization

TRPO was elegant but computationally heavy: every update solves a constrained optimization problem (in practice with conjugate gradient and a line search).

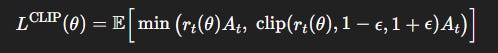

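PPO simplified it with a clipped surrogate objective:

$$L^{\text{CLIP}}(\theta) = \mathbb{E}_t\left[\min\big(r_t(\theta)\,A_t,\ \operatorname{clip}(r_t(\theta), 1-\epsilon, 1+\epsilon)\,A_t\big)\right], \qquad r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\text{old}}(a_t \mid s_t)}$$

where ε is the clipping parameter.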

Key ideas:

Ratio r_t(θ) still measures how far the policy has moved.

Clipping removes the incentive to push r_t(θ) outside [1 − ε, 1 + ε], so updates stay close to the old policy.

Simpler and faster than TRPO (plain first-order gradient steps, no constrained solver), and widely adopted.
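As a rough illustration of the objective, here is a batched version that forms each ratio from stored log-probabilities, r = exp(log π_new − log π_old), a pattern commonly used in practice. The function name and inputs are hypothetical, and a real implementation would compute this on tensors and differentiate it; this sketch only evaluates the objective:

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, eps=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a batch of samples."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # r = pi_new / pi_old
        clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped_ratio * adv)  # keep the pessimistic (lower) term
    return total / len(advantages)


# Two hypothetical samples: one with a positive advantage, one with a negative advantage.
objective = ppo_clip_objective(
    new_logp=[math.log(0.75), math.log(0.3)],
    old_logp=[math.log(0.5), math.log(0.6)],
    advantages=[10.0, -10.0],
)
print(objective)  # about 2.0, i.e. (12 + (-8)) / 2
```

The second sample has a ratio of 0.5 and a negative advantage; clipping floors its ratio at 1 − ε = 0.8, and the min keeps the more pessimistic −8 rather than −5.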

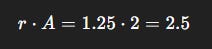

Numerical Example 2: PPO Clipping

Suppose:

π_old(a|s) = 0.5.

π_new(a|s) = 0.75.

Advantage: A = 10.

Clip parameter: ε = 0.2.

Step 1: Ratio

r = π_new(a|s) / π_old(a|s) = 0.75 / 0.5 = 1.5.

Step 2: Candidate terms

Unclipped = r · A = 1.5 · 10 = 15.

Clipped ratio = min(max(0.8, 1.5), 1.2) = 1.2.

Clipped = 1.2 · 10 = 12.

Step 3: Surrogate objective

L_CLIP = min(unclipped, clipped) = min(15, 12) = 12.

Interpretation: Without clipping, the objective would keep rewarding a bigger move (15). PPO caps the term at 12, so pushing the ratio beyond 1 + ε = 1.2 gains nothing, which keeps policy growth controlled.
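To see why the cap matters, the sketch below uses a hypothetical single-sample helper with Example 2's A = 10 and ε = 0.2, sweeps the ratio, and evaluates the clipped term. Once r passes 1 + ε, the objective stops increasing, so there is no gradient pushing the policy further:

```python
def clipped_term(ratio, advantage, eps=0.2):
    """Single-sample PPO term: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped_ratio * advantage)


# Example 2 uses A = 10 and eps = 0.2; sweep the ratio r.
for r in [1.0, 1.1, 1.2, 1.5, 2.0]:
    print(f"r={r:.1f} -> objective {clipped_term(r, 10.0):.1f}")
# The objective rises 10.0, 11.0, 12.0 and then stays at 12.0 for r = 1.5 and r = 2.0.

# With a negative advantage, the ratio is floored at 1 - eps instead.
print(clipped_term(0.5, -10.0))  # -8.0
```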

Visual:

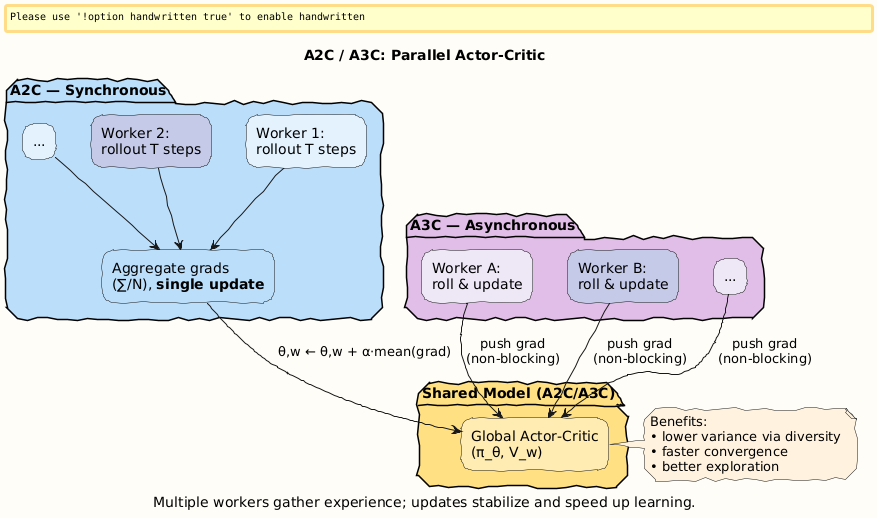

Step 4. A2C and A3C: Parallelism

Another issue: gradient estimates from a single agent's limited, highly correlated trajectories have high variance.

Solution: run multiple environments in parallel.

A2C (Advantage Actor–Critic): synchronous. N workers gather experience, their gradients are averaged, and the shared parameters are updated together.

A3C (Asynchronous Advantage Actor–Critic): asynchronous. Each worker updates the global parameters independently, which is faster but noisier.
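The synchronous A2C pattern can be sketched on a toy problem. Everything here is an assumption for illustration: a hypothetical two-armed bandit with a single state, noisy Gaussian rewards, a scalar critic `value` used as the baseline for the advantage (reward − value), and hand-picked learning rates. The workers run one after another rather than truly in parallel, but the update follows the A2C rule: wait for all N workers, average their gradients, and apply one update:

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical environment: a two-armed bandit with one state; arm 1 pays more on average.
ARM_MEANS = [0.2, 0.8]


def worker_rollout(theta, value, rng):
    """One worker: sample an action, observe a noisy reward, return actor and critic updates."""
    probs = softmax(theta)
    action = 0 if rng.random() < probs[0] else 1
    reward = ARM_MEANS[action] + rng.gauss(0.0, 0.5)
    advantage = reward - value  # the critic's estimate acts as the baseline
    actor_grad = [advantage * ((1.0 if i == action else 0.0) - p) for i, p in enumerate(probs)]
    critic_step = advantage  # negative gradient of 0.5 * (reward - value) ** 2 w.r.t. value
    return actor_grad, critic_step


def a2c_step(theta, value, n_workers, rng, lr_actor=0.1, lr_critic=0.1):
    """Synchronous update: wait for all workers, average their updates, apply them once."""
    results = [worker_rollout(theta, value, rng) for _ in range(n_workers)]
    avg_actor = [sum(res[0][i] for res in results) / n_workers for i in range(len(theta))]
    avg_critic = sum(res[1] for res in results) / n_workers
    new_theta = [t + lr_actor * g for t, g in zip(theta, avg_actor)]
    return new_theta, value + lr_critic * avg_critic


rng = random.Random(0)
theta, value = [0.0, 0.0], 0.0
for _ in range(200):
    theta, value = a2c_step(theta, value, n_workers=8, rng=rng)
print(softmax(theta))  # most of the probability should now sit on arm 1
```

An A3C-style variant would instead let each worker apply its own update to the shared `theta` and `value` as soon as it finishes, without waiting for the others.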

Advantages:

Faster convergence.

More stable updates.

Broader state exploration.
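The “more stable updates” point follows from a basic property of averages: if N workers produce independent, equally noisy gradient estimates, their mean has a standard deviation about 1/√N times that of a single estimate. The sketch below assumes a hypothetical Gaussian noise model for one worker's gradient; real workers' gradients are only approximately independent:

```python
import random
import statistics


def noisy_gradient(rng):
    """Hypothetical single-worker gradient estimate: true value 1.0 plus heavy Gaussian noise."""
    return 1.0 + rng.gauss(0.0, 2.0)


def averaged_gradient(n_workers, rng):
    """Average the estimates of n independent workers, as a synchronous update would."""
    return sum(noisy_gradient(rng) for _ in range(n_workers)) / n_workers


rng = random.Random(42)
for n in [1, 4, 16]:
    estimates = [averaged_gradient(n, rng) for _ in range(2000)]
    print(f"{n:2d} workers: std of gradient estimate = {statistics.pstdev(estimates):.2f}")
# The spread shrinks roughly as 2 / sqrt(n) under this noise model.
```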

Step 5. Why These Methods Changed Everything

TRPO: Introduced trust regions, gave theoretical guarantees.

PPO: Clipped surrogate objective, simple and practical.

A2C/A3C: Parallelism that made deep RL feasible at scale.

These methods powered Atari agents, robotics, MuJoCo control tasks, and even OpenAI’s work on fine-tuning language models from human feedback, which relies on PPO.

They transformed policy gradients from fragile toys to the backbone of modern RL.

Special: Learn More in Learning to Learn: Reinforcement Learning Explained for Humans

For detailed derivations, proofs, and additional worked problems, check my book:

Learning to Learn: Reinforcement Learning Explained for Humans

What you’ll find inside:

TRPO: mathematical derivation of constrained optimization.

PPO: clipping with worked examples (step by step).

A2C/A3C: how parallelism stabilizes training.

Bridges to modern PG methods like PPO2, SAC, and DreamerV2.

Get it here:

Closing

Vanilla PG was unstable.

TRPO: introduced trust regions (safe zones).

PPO: clipped objective, practical and popular.

A2C/A3C: parallelism to reduce variance.

We worked two numerical examples:

TRPO ratio check (surrogate term 2.5, KL 0.02 < δ, accepted).

PPO clipping (clipped from 15 → 12).

Together, these methods turned policy gradients from fragile experiments to the workhorse of deep RL.

Next: Exploration in Policy Gradient Methods: entropy bonuses, curiosity-driven signals, intrinsic motivation.

Follow and Share

You can follow me on Medium to read more: https://medium.com/@satyamcser

#ReinforcementLearning #PolicyGradients #TRPO #PPO #A2C #A3C #TrustRegion #ClippedObjective #ActorCritic #DeepRL #AIExplained #MLMath #satmis